My task was to extract pitch values from a long list of audio files. Previously I used Praat and R for this, but looping in R was rather slow, so I wanted to find another solution. The following analysis was developed on Linux (Ubuntu).

First, I tried aubio (a C library with Python bindings and command-line tools) to extract pitch from wav files. aubio has fewer options than Praat, and it returned awkward values with its default settings, so I didn't explore it further. Its advantages are that it is simple to use and can also be called from Python. To extract pitch with aubio, run:

sudo apt install aubio-tools

aubiopitch -i P17_trim_short_10.000-11.150.wav

Eventually I decided to stick to Praat, which is the workhorse of phonetics and can be used from the command line.

Praat records every command you execute in its command history, and this can be a great starting point for a script. More information about scripting in Praat is here. My solution is here:

This script creates a .pitch file for each .wav file in the working directory and saves the results to a subfolder. Praat scripts can be run from the command line:

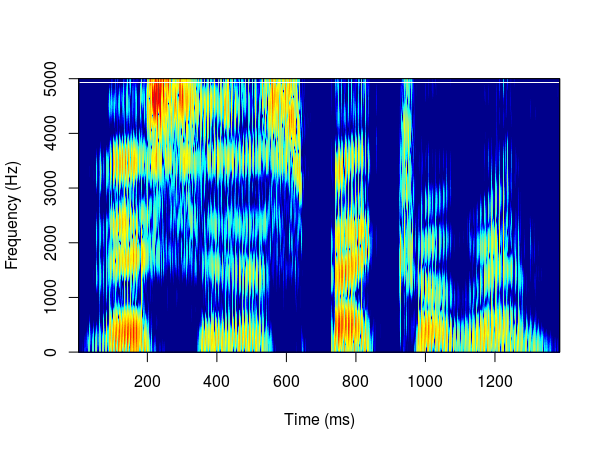

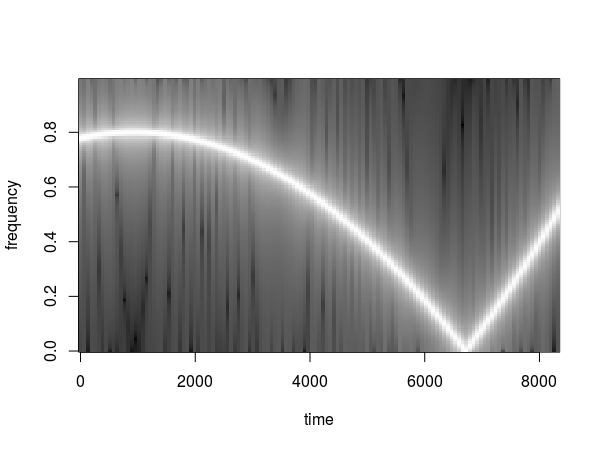

praat --run extract_pitch_script.praat

This extracts pitch tracks from all .wav files in the directory, using Praat's default pitch settings. The output is one .pitch file per .wav file. These files contain every pitch candidate for every frame and are not in a tidy format, so they have to be transformed. This step could probably be done in Praat scripting, but I did not have the patience for it, so I moved to R, which could easily produce the desired output.
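To make the "not tidy" point concrete, here is a minimal sketch of reading a .pitch file, written in Python rather than the R used in this post. It assumes the file was saved in Praat's long text format, where the header gives `x1` (time of the first frame) and `dx` (frame step) and each candidate has a `frequency` line followed by a `strength` line. The function name `parse_pitch_text` is my own, and the layout should be checked against your own files.

```python
import re

# Patterns for Praat's long text format (assumed layout, verify on your files).
NUM = r"([-+0-9.eE]+)"
HEADER_RE = re.compile(r"^\s*(dx|x1)\s*=\s*" + NUM)
FRAME_RE = re.compile(r"^\s*frames?\s*\[(\d+)\]\s*:")  # "frame [3]:" or "frames [3]:"
FREQ_RE = re.compile(r"^\s*frequency\s*=\s*" + NUM)
STRENGTH_RE = re.compile(r"^\s*strength\s*=\s*" + NUM)


def parse_pitch_text(text):
    """Return a list of (time_s, [(frequency_hz, strength), ...]), one per frame."""
    header = {}
    frames = []          # one candidate list per frame, in file order
    pending_freq = None  # frequency waiting for its matching strength line
    for line in text.splitlines():
        m = HEADER_RE.match(line)
        if m and m.group(1) not in header:
            header[m.group(1)] = float(m.group(2))
            continue
        if FRAME_RE.match(line):
            frames.append([])
            continue
        m = FREQ_RE.match(line)
        if m and frames:
            pending_freq = float(m.group(1))
            continue
        m = STRENGTH_RE.match(line)
        if m and frames and pending_freq is not None:
            frames[-1].append((pending_freq, float(m.group(1))))
            pending_freq = None
    # Frame i (0-based) is centred at x1 + i * dx.
    return [(header["x1"] + i * header["dx"], cands)
            for i, cands in enumerate(frames)]
```

The function takes the file contents as a string, so reading the file (and choosing the right encoding) is left to the caller. Each frame keeps all of its candidates, including the unvoiced one, which Praat stores with a frequency of 0.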

R can be called from the command line using littler. The shebang on the first line means that the script can be executed directly from the command line. The script below transforms .pitch files into clean .csv files.

To invoke the R script, run in the command line:

r praat_pitch_analysis_CLI.R untitled_script.pitch

This creates a .csv file with the best pitch candidate above a certain confidence threshold. The pitch extraction algorithm used by Praat was developed by Boersma (1993).
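The selection step can be sketched as follows, again in Python rather than R. This is one plausible reading of "best candidate above a threshold", not necessarily what the R script does: for each frame it takes the voiced candidate with the highest strength and keeps it only if that strength reaches `min_strength`. The function names, the column names, and the default threshold of 0.5 are illustrative assumptions.

```python
import csv
import io


def best_candidates(frames, min_strength=0.5):
    """Pick the strongest voiced candidate per frame, or None below the threshold.

    `frames` is a list of (time_s, [(frequency_hz, strength), ...]).
    `min_strength` is an illustrative value, not the one used in the post.
    """
    rows = []
    for time_s, candidates in frames:
        # A frequency of 0 marks the unvoiced candidate, so it is skipped here.
        voiced = [c for c in candidates if c[0] > 0]
        best = max(voiced, key=lambda c: c[1], default=None)
        if best is not None and best[1] >= min_strength:
            rows.append((time_s, best[0], best[1]))
        else:
            rows.append((time_s, None, None))
    return rows


def to_csv(rows):
    """Serialise rows as CSV text with a header; missing values become empty cells."""
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    writer.writerow(["time_s", "f0_hz", "strength"])
    writer.writerows(rows)
    return buf.getvalue()
```

Frames without a sufficiently strong voiced candidate are kept as rows with empty pitch cells, so the time axis stays regular and unvoiced stretches show up as gaps rather than disappearing.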